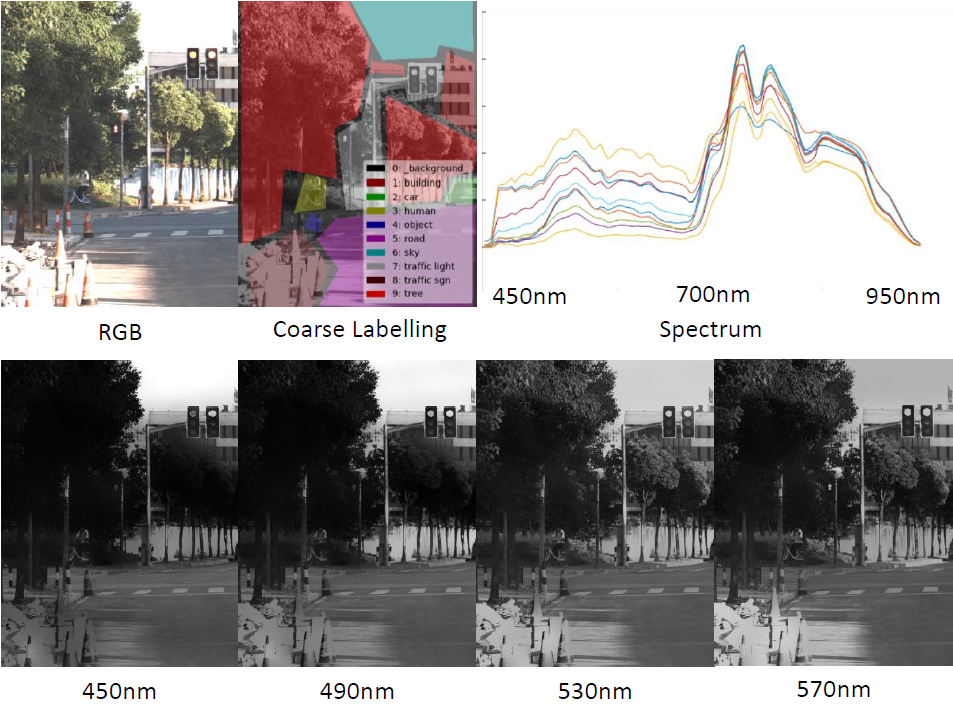

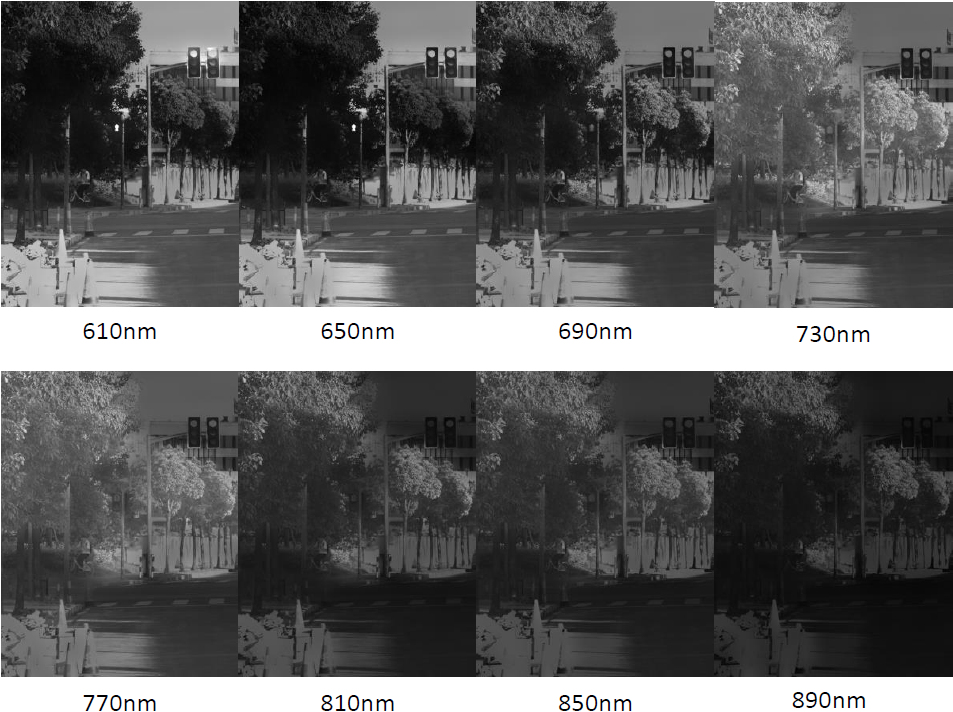

Fig.1. An example from the Hyperspectral City 1.0 dataset. We provide high-resolution hyperspectral data of typical driving scenes with coarse semantic labels.

Fig.2. Outdoor data capturing in Shanghai. The camera system is top-mounted.

Physics Based Vision Meets Deep Learning

Light traveling through the 3D world interacts with the scene through intricate processes before being captured by a camera. These processes produce dazzling effects such as color and shading, complex surface and material appearance, and different kinds of weathering, to name just a few. Physics based vision aims to invert these processes and recover scene properties, such as shape, reflectance, light distribution, and medium properties, from images by modelling and analysing the imaging process to extract the desired features or information.

There are many popular topics in physics based vision, including shape from shading, photometric stereo, reflectance modelling, reflection separation, radiometric calibration, and intrinsic image decomposition. As a family of classic and fundamental problems in computer vision, physics based vision supports high-level computer vision in several ways. For example, estimated surface normals are a useful cue for 3D scene understanding; a specular-free image can significantly increase the accuracy of image recognition; intrinsic images, which reflect inherent properties of the objects in the scene, substantially benefit other computer vision algorithms such as segmentation and recognition; reflectance analysis provides fundamental support for material classification; and bad-weather visibility enhancement is important for outdoor vision systems.

In recent years, deep neural networks and learning techniques have shown promising improvements on various high-level vision tasks, such as detection, classification, and tracking. When the physical image formation model is incorporated, successful examples can also be found in various physics based vision problems (please refer to the references section).

When physics based vision meets deep learning, the benefits are mutual. On one hand, classic physics based vision tasks can be implemented in a data-driven fashion to handle complex scenes. A physically more accurate optical model can be too complex to solve as an inverse problem (it usually has too many unknown parameters), but it can be well approximated given a sufficiently large collection of data. The intrinsic physical properties are then likely to be learned by a deep neural network model. Existing research has already exploited this benefit in luminance transfer, computational stereo, haze removal, and other tasks.

On the other hand, high-level vision tasks can also benefit from awareness of physics principles. For instance, physics principles can be used to supervise the learning process by explicitly extracting low-level physical cues rather than leaving the network to learn them implicitly. In this way, the network can become more accurate and more efficient. Such physics principles have already shown benefits in semantic segmentation, object detection, and other tasks. Therefore, we believe that when physics based vision meets deep learning, both low-level and high-level vision tasks benefit. Furthermore, we believe that many computer vision tasks can be tackled by solving physics based vision and high-level vision jointly, yielding more robust and accurate results than can be achieved when either side is ignored.
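As a rough illustration of how a physics principle might supervise learning, the sketch below adds a physics consistency term to a task loss. It assumes the widely used atmospheric scattering model for haze, I = J·t + A·(1 − t), which is our choice of example and is not taken from this page. The function names, the flattened grayscale pixel lists, and the `weight` hyperparameter are all hypothetical.

```python
def render_hazy(clear, transmission, airlight):
    """Atmospheric scattering model, per pixel: I = J * t + A * (1 - t)."""
    return [j * t + airlight * (1.0 - t) for j, t in zip(clear, transmission)]


def physics_consistency_loss(observed, clear_pred, transmission_pred, airlight):
    """Mean squared error between the observed image and its physical re-rendering."""
    rendered = render_hazy(clear_pred, transmission_pred, airlight)
    return sum((o - r) ** 2 for o, r in zip(observed, rendered)) / len(observed)


def total_loss(task_loss, observed, clear_pred, transmission_pred, airlight, weight=0.1):
    """Task loss plus a weighted physics term (weight is a hypothetical hyperparameter)."""
    physics = physics_consistency_loss(observed, clear_pred, transmission_pred, airlight)
    return task_loss + weight * physics


if __name__ == "__main__":
    # Toy flattened grayscale "image" of four pixels (made-up values).
    clear = [0.2, 0.4, 0.6, 0.8]
    transmission = [0.9, 0.7, 0.5, 0.3]
    airlight = 1.0
    observed = render_hazy(clear, transmission, airlight)

    # Predictions that obey the model incur no physics penalty.
    print(physics_consistency_loss(observed, clear, transmission, airlight))
    # A transmission estimate that ignores the haze is penalized.
    print(physics_consistency_loss(observed, clear, [1.0] * 4, airlight))
```

In a real system the predictions would come from a network and the loss would be written in an automatic differentiation framework; plain Python lists are used here only to keep the sketch self-contained.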

Hyperspectral City

We propose a semantic segmentation challenge for urban autonomous driving scenes that uses a newly developed hyperspectral camera. The motivation is to compensate for the insufficient visual quality of existing datasets. In particular, the Cityscapes[1] dataset provides only extremely washed-out RGB images. To address this, we propose a new dataset that adopts multi-channel visual input. Our new dataset provides the following benefits: 1. The visual input is properly balanced and colourful. 2. We can analyse visual properties that cannot be seen in the RGB channels. 3. We can robustly handle night scenes, thanks to the near-infrared band. 4. We can robustly handle water-related phenomena, including rain and fog, because of the absorption behaviour in the infrared band.

For the initial release of the dataset, we propose the task of semantic segmentation with coarse labeling. We release 367 hyperspectral frames with coarse labeling for training and 68 frames with fine labeling for testing.