Data Collection

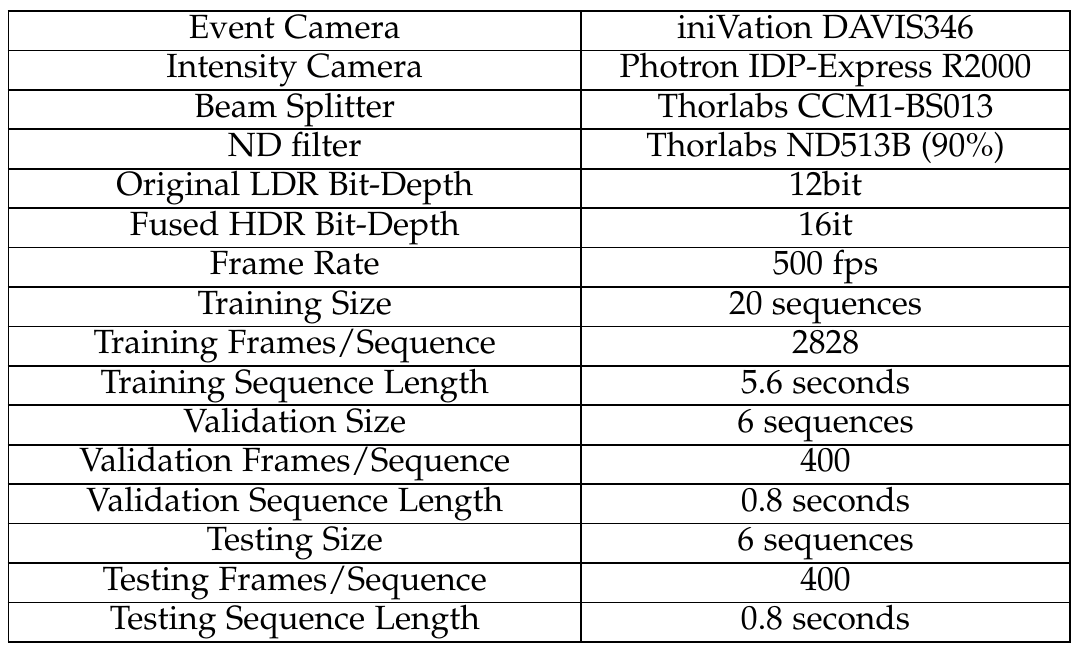

For the challenge, a unique dataset has been collected that provides real paired Event-to-HDR data for high-speed HDR video reconstruction from event streams. The data were captured with an integrated system that records high-speed HDR videos and the corresponding event streams simultaneously: an event camera records the event streams, while two high-speed cameras capture synchronized Low Dynamic Range (LDR) frames. These LDR frames are later fused to create High Dynamic Range (HDR) frames. Careful alignment of the cameras within the system keeps the high-speed HDR videos accurately synchronized with the event streams.

Dataset characteristics

Real high-bit HDR.

Existing methods primarily exploit the high dynamic range inherent in event data, whereas our dataset also includes real high-bit HDR data. Each HDR frame is created by fusing two images with different exposures using an HDR fusion strategy. This matters because most current methods are not trained on real high-bit-depth HDR data, which limits their ability to generate such HDR formats.
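To make the idea of fusing two exposures concrete, here is a minimal sketch of one common approach: a weighted merge in which each pixel is divided by its exposure time and well-exposed pixels are trusted more than clipped ones. This is an illustration only and not necessarily the fusion strategy used for this dataset. The function names, the triangle weighting, the linear camera response, and the exposure times are all assumptions.

```python
def hat_weight(v: float) -> float:
    """Triangle weight: 1 at mid-gray, 0 at the clipped extremes 0 and 1."""
    return 1.0 - abs(2.0 * v - 1.0)


def fuse_pixel(v_short: float, v_long: float,
               t_short: float, t_long: float, eps: float = 1e-6) -> float:
    """Merge two linear LDR values in [0, 1] into one relative radiance value.

    Assumes a linear camera response, so value / exposure_time estimates radiance.
    eps keeps the weights positive when both pixels are clipped.
    """
    w_s = hat_weight(v_short) + eps
    w_l = hat_weight(v_long) + eps
    return (w_s * v_short / t_short + w_l * v_long / t_long) / (w_s + w_l)


def fuse_frames(short, long, t_short, t_long):
    """Apply fuse_pixel to two equally sized frames given as nested lists."""
    return [
        [fuse_pixel(s, l, t_short, t_long) for s, l in zip(row_s, row_l)]
        for row_s, row_l in zip(short, long)
    ]


# Hypothetical exposures of 1 ms and 4 ms. The second pixel is saturated
# in the long exposure, so the short exposure dominates the result there.
hdr = fuse_frames([[0.1, 0.5]], [[0.4, 1.0]], t_short=0.001, t_long=0.004)
```

The fused values are relative radiances that are no longer bounded by the LDR range, which is why the result calls for a higher bit depth than either input frame.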

Paired Event-to-HDR dataset.

Existing datasets often contain only paired testing data created by simulating a virtual camera's trajectory. In contrast, this dataset provides real paired training data, which avoids the domain gap that synthetic training data typically has with real-world testing scenarios and offers a more realistic and applicable training environment.

High-speed.

In keeping with the high-speed nature of event streams, our videos are captured with a high-speed camera at a frame rate of 500 fps. This frame rate significantly exceeds that of APS frames or of any other event-to-HDR dataset, making our dataset particularly well suited to applications requiring high temporal resolution.
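At 500 fps, consecutive HDR frames are 2 ms apart, so each frame can be paired with the events that fall inside its 2 ms window. The sketch below shows one way to find those events. It assumes, hypothetically, that event timestamps are stored as a sorted list of integer microseconds sharing a clock origin with the frames; the actual event format of the dataset may differ, and the function name is illustrative.

```python
from bisect import bisect_left

FPS = 500
FRAME_INTERVAL_US = 1_000_000 // FPS  # 2000 microseconds between frames


def events_between_frames(timestamps_us, frame_index, t0_us=0):
    """Return (start, stop) indices of the events between frame k and frame k+1.

    timestamps_us must be sorted in ascending order (assumed event layout).
    The window is half-open: [t_k, t_k + interval).
    """
    start_t = t0_us + frame_index * FRAME_INTERVAL_US
    stop_t = start_t + FRAME_INTERVAL_US
    return bisect_left(timestamps_us, start_t), bisect_left(timestamps_us, stop_t)


# Toy timestamps in microseconds.
ts = [0, 500, 1999, 2000, 3500, 4000]
start, stop = events_between_frames(ts, frame_index=1)
window = ts[start:stop]  # events in [2000, 4000)
```

Binary search keeps each lookup logarithmic in the number of events, which helps when a stream contains millions of events per second.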